Arabic Algerian Sign Language Translation System Based on 3D Avatar Technology

Research article | Open access

Available online on: 27 April, 2025

Last update: 27 April, 2025


Abstract

This work focuses on the creation of tools to help deaf people learn Arabic Algerian Sign Language (ALSL), specifically an automatic translation system with a 3D avatar for accurate and dynamic sign representation. The system encodes ALSL signs using the Notation System Method (NSM), which is principally based on the Hamburg Notation System (HamNoSys).

We also briefly discuss the linguistic complexity of ALSL and the obstacles to developing technology that supports accurate translation. To ensure correctness, ALSL translation experts were involved at every stage of development. The system's lexicon of 417 words achieved an expert-validated accuracy of 75.53%. These results represent a substantial step forward in 3D avatar-based sign language simulation, with opportunities for further refinement and development.

Keywords: Arabic Algerian Sign Language, 3D Avatar, HamNoSys, Deaf Community, Translation System.

Introduction

The rise of digital technology has created unprecedented opportunities to remove communication barriers and to promote inclusivity and accessibility. Closing the communication gap between deaf and hearing communities is a critical area in which technology can bring about substantial change. Sign languages, visual languages expressed through hand gestures, body movements, and facial expressions, are integral to the daily lives of millions of deaf people worldwide. However, the differences among sign languages, together with the general public's limited understanding of them, can hinder communication between sign language users and people unfamiliar with these languages.

This study details the creation of an automated translation system for Arabic Algerian Sign Language (ALSL) based on 3D avatar technology. Drawing on systems engineering and web technologies, the project addresses communication gaps for ALSL users with a 3D avatar that depicts ALSL signs dynamically and precisely. The aim is an engaging user experience and an accurate visual rendering of ALSL that improves accessibility for both deaf and hearing users.

The document is organized to guide readers through the creation of a 3D avatar-based translation system for Arabic Algerian Sign Language (ALSL). It begins with an overview of sign languages, emphasizing the historical context and regional variation of Algerian Sign Language, and then reviews related work on sign language translation and 3D avatar technology. The proposed methodology section outlines the dictionary construction process and the system architecture, and the implementation section details the technical procedures, from data collection and HamNoSys encoding to avatar-based gesture animation. Tests and a conclusion follow. Each part builds on the previous one, together forming a unified strategy for improving communication within Algeria's deaf community.

Background

Sign language was officially recognized as the principal language of deaf people in Algeria by a law passed on May 8, 2002. Algerian Sign Language (ALSL) is derived from French Sign Language (Langue des Signes Française, LSF).

The history and evolution of ALSL explain its regional variation and lack of standardization. This non-uniformity sets ALSL apart from other sign languages and calls for further study of its regional variants and their influence on the language as a whole.

Documented ALSL variants include the following. The Algerian Sign Language of Laghouat is used by many deaf people in the province and neighboring areas, in both cities and villages [13]. The Algerian Sign Language of Oran is widely used by the Deaf community in northern Algeria, especially in Oran [11]. In Adrar, in southern Algeria, the Deaf community also uses Algerian Sign Language [15]. In 2017, the National Foundation for Media Contact in Algeria produced the first Algerian Sign Language dictionary [17]. The dictionary presents images of signs with captions in Arabic and French. It contains no grammar rules or structured phrases; instead, it relies on Arabic grammar and sentence structure to convey meaning in sign language [17].

3D virtual avatars are increasingly used to meet the educational needs of deaf and hearing-impaired students who often struggle with spoken language literacy [5]. Sign languages are essential for their communication, enabling interaction within their communities and with hearing individuals [23]. To support social integration, sign language interpretation systems have been developed to convert text and speech into signed language through 3D avatars, facilitating education, accessibility, and research [25]. These systems also aid in documenting and preserving sign languages for future generations [23, 25].

A 3D avatar is a three-dimensional representation of a real or imagined character in a digital world. An artist can build such a computer-generated character from scratch or reproduce a real person from a 3D scan. A 3D human avatar typically consists of a geometric mesh in a neutral pose, with textures and a skinning mechanism that deforms the mesh to simulate movement. Additional elements such as materials, textures, and accessories (clothing, hair) add realism.
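As an illustration of what a skinning mechanism computes, linear blend skinning, one widely used scheme (we do not assume it is the one used by the avatars in this work), moves each mesh vertex by a weighted blend of the transforms of the bones that influence it. Here v_k is vertex k of the neutral-pose mesh in homogeneous coordinates, M_i is the current transform of bone i, B_i is that bone's transform in the neutral (bind) pose, n_b is the number of bones, and w_{k,i} are the per-vertex skinning weights:

```latex
\mathbf{v}_k' = \sum_{i=1}^{n_b} w_{k,i}\, \mathbf{M}_i \mathbf{B}_i^{-1} \mathbf{v}_k,
\qquad w_{k,i} \ge 0, \qquad \sum_{i=1}^{n_b} w_{k,i} = 1
```

In the neutral pose M_i = B_i for every bone, so each vertex stays where it is; animating a sign amounts to updating the bone transforms M_i frame by frame.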

Recent developments in 3D technology have made it possible to create detailed 3D avatars with applications in fields such as gaming, virtual environments, fashion, healthcare, and the military. These avatars can be customized to individual needs, from body sizing to specific functions, and support applications such as disease diagnosis [2, 6].

The 3D avatar ecosystem uses file formats such as FBX (Autodesk), OBJ (Wavefront), and glTF (Khronos Group) to exchange geometry and animation data. Advances in laser scanning, white-light scanning, photogrammetry, and machine vision have enabled high-accuracy 3D data capture, supporting fields from biomedical engineering to film production [9].

Related works

Researchers have made significant advances in designing systems to animate and translate sign languages, utilizing a variety of technologies and approaches. Bouzid et al. developed a web-based ASL system that uses SignWriting Markup Language and low-poly 3D avatars for visual representation [3]. Othman et al. contributed significantly to ASL transcription by creating a new XML-based machine translation model [21].

Kaur et al. used SiGML and the JASigning app to translate Indian Sign Language words [12]. Punchimudiyanse et al. created a Python and Blender application that animates Sinhala Sign Language (SSL) words without requiring video or motion capture [22].

Gonçalves et al. used C++ and the Irrlicht 3D engine to produce 3D avatar animations for Brazilian Sign Language [10]. Da et al. used the Hamburg Notation System to convert Vietnamese television news into 3D sign language animations [4].

Al-Barahamtoshy et al. created a system for translating Arabic text into Arabic Sign Language (ArSL) that includes a speech module and transformational rules [1]. Luqman et al. developed an Arabic Sign Language gloss system to represent Arabic signs and words efficiently [14].

Brour et al. used video sequences and a rule-based system to accurately depict Arabic Sign Language [16].

The Mada Assistive Technology Center created the BuHamad Project, Qatar's first virtual 3D sign language avatar for Qatari Sign Language (QSL). The project combines AI and motion capture to translate Arabic text into QSL in real time. It supports online machine translation and broadens accessibility for the deaf community in Qatar by enabling digital interactions across sectors [20]. In related work, Othman et al. [19] investigated the acceptance of signing avatars, specifically the culturally adapted BuHamad avatar, among Deaf and Hard of Hearing individuals in Qatar. Their results indicate that participants mostly hold favorable views of the signing avatar and its potential to improve accessibility.

Proposed methodology

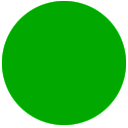

Our approach centers on building a comprehensive lexicon for Algerian Sign Language (ALSL), as illustrated in Figure 1. The dictionary is constructed from an existing dataset of sign language videos annotated in HamNoSys. Exploiting the linguistic similarities between ALSL and French Sign Language (LSF), we directly import the HamNoSys notations from the dataset for words common to both languages. ALSL experts assist in manually adding and editing entries for Arabic terms absent from the DictaSign dataset, ensuring accuracy and comprehensiveness.

Figure 1: The ALSL dictionary construction process
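The dictionary construction process in Figure 1 can be sketched as a merge of two sources: HamNoSys transcriptions imported for signs shared with LSF, and entries added by ALSL experts. This is a minimal illustration under stated assumptions, not the authors' implementation: the function and field names are hypothetical, HamNoSys strings are treated as opaque text, and an expert entry is assumed to override an imported one.

```python
from dataclasses import dataclass


@dataclass
class LexiconEntry:
    """One ALSL dictionary entry: an Arabic word and its HamNoSys transcription."""
    arabic: str
    hamnosys: str
    source: str  # "manual" (entered by an ALSL expert) or "imported" (from LSF data)


def build_lexicon(vocabulary, arabic_to_lsf, lsf_hamnosys, expert_hamnosys):
    """Build the ALSL lexicon for `vocabulary` (a list of Arabic words).

    arabic_to_lsf:   Arabic word -> LSF gloss, for signs shared by ALSL and LSF
    lsf_hamnosys:    LSF gloss -> HamNoSys string from the annotated LSF dataset
    expert_hamnosys: Arabic word -> HamNoSys string entered by an ALSL expert
    Returns (lexicon, pending), where pending lists words with no transcription yet.
    """
    lexicon, pending = {}, []
    for word in vocabulary:
        gloss = arabic_to_lsf.get(word)
        if word in expert_hamnosys:
            # Assumption: an expert entry overrides any imported transcription.
            lexicon[word] = LexiconEntry(word, expert_hamnosys[word], "manual")
        elif gloss is not None and gloss in lsf_hamnosys:
            # Shared sign: reuse the LSF HamNoSys transcription directly.
            lexicon[word] = LexiconEntry(word, lsf_hamnosys[gloss], "imported")
        else:
            pending.append(word)  # needs manual transcription by an ALSL expert
    return lexicon, pending
```

The words returned in `pending` are the ones that, in the process described above, would go to ALSL experts for manual transcription.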

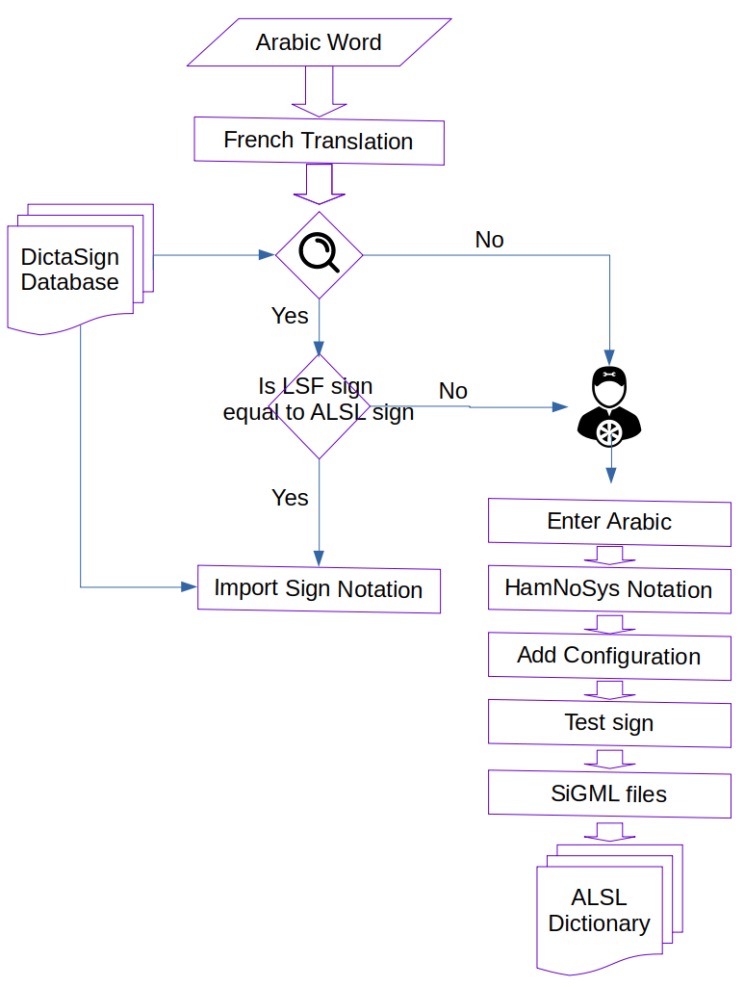

The next stage transforms the assembled HamNoSys notations into SiGML, a markup language suitable for 3D avatar rendering, and ALSL specialists review the resulting lexicon to confirm its accuracy. We then built a system that converts Arabic text into 3D ALSL animations; its architecture is shown in Figure 2. The system first preprocesses the input text, then looks up each word or phrase in the ALSL lexicon, and finally renders the matching SiGML notation as 3D avatar movement. By combining dictionary building with system development, this methodology aims to provide a dynamic and accessible translation platform for Arabic-speaking ALSL users.

Figure 2: System architecture
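The lookup and rendering steps of this architecture can be sketched as follows, assuming the lexicon maps each Arabic word or phrase to a SiGML `<hns_sign>` fragment and that all the signs for one input are sent to the player as a single `<sigml>` document. The function name `assemble_sigml` is hypothetical, and since the handling of words missing from the lexicon is not described here, the sketch simply returns them.

```python
import xml.etree.ElementTree as ET


def assemble_sigml(units, sign_lexicon):
    """Look up each text unit and gather the matching signs into one SiGML document.

    units:        words or phrases produced by text preprocessing
    sign_lexicon: word or phrase -> SiGML <hns_sign> fragment (as a string)
    Returns (sigml_document, missing_units).
    """
    root = ET.Element("sigml")
    missing = []
    for unit in units:
        fragment = sign_lexicon.get(unit)
        if fragment is None:
            missing.append(unit)  # out-of-vocabulary: reported, not signed
            continue
        root.append(ET.fromstring(fragment))  # signs are played in input order
    return ET.tostring(root, encoding="unicode"), missing
```

Keeping all signs in one document lets the player perform a whole sentence in sequence rather than loading one file per word.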

Implementation

This section gives an overview of how we developed the Automated Arabic Algerian Sign Language (ALSL) Translation System, highlighting the challenges faced and the solutions implemented.

Development Tools

The Notation System Method (NSM) is implemented with web technologies (JavaScript, HTML, and CSS). The tools used with NSM to translate ALSL words into 3D avatar animations are:

- HamNoSys: The Hamburg Notation System (HamNoSys) is used for encoding sign language gestures [18].

- SiGML: The Signing Gesture Markup Language (SiGML) is an XML encoding of HamNoSys notation that the avatar software turns into animation. This bridge between textual description and visual representation supports accurate and consistent gesture animation [8].

- eSIGN Editor: This tool is used to create and manage sign language dictionaries, which is vital for the accuracy and coverage of the ALSL gestures performed by the avatar [27].

- CWASA SiGML Player: The SiGML Player animates sign language data encoded in SiGML (Signing Gesture Markup Language). It is part of the broader CWASA (CWA Signing Avatars) system, which synthesizes natural sign language performance using virtual human avatars.

- CWASA Virtual Signing System: The CWASA system provides the avatar's visual signing capabilities, allowing it to perform complex ALSL gestures fluidly. It aims to make the gestures lifelike and expressive, respecting the nuances of sign language [26].

Solution steps

This subsection provides a comprehensive description of the implementation of our proposal utilizing the Notation System Method (NSM). We outline each methodological step and supply specifics regarding the approaches used in each developmental phase, covering:

- Data Collection: Collecting and structuring the necessary data to develop a precise translation system.

- ALSL Translation Dictionary building: Developing a dictionary designed for Arabic Algerian Sign Language (ALSL) to enhance translation ability.

- Translating HamNoSys to SiGML: Converting HamNoSys notation into SiGML, a format suitable for 3D avatar rendering.

- Text Processing: Analyzing input text to ensure precise translation and presentation.

- User Interface (UI) Derived from CWASA: Creating an intuitive interface based on the CWASA architecture to improve accessibility and engagement.

Data Collection

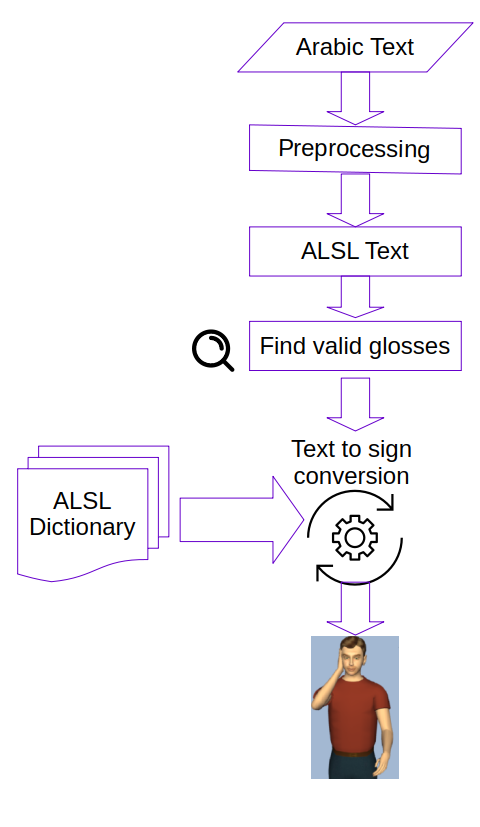

- Data Sources: The approach primarily relied on the DictaSign dataset. This corpus includes 1,000 lexical items translated into four different sign languages, with our research focusing on the equivalents in French Sign Language. Collaborating with a team of experts, we translated over 300 words from the dataset, aligning sign performances between Algerian Sign Language and French Sign Language [7].

- The dataset provides, for each word, a video clip of the sign and its HamNoSys notation; Figure 3, for example, shows the LSF sign for "give up" (French: abandonner).

Figure 3: LSF representation of the word "Abandonner" (give up) in video and in HamNoSys

For words not included in the DictaSign dataset, we used videos of native Algerian Sign Language signers. For example, we sourced videos from the "Algerian sign language" Facebook page [24], which regularly posts Arabic words together with their corresponding Algerian Sign Language signs, as shown in Figure 4.

Figure 4: Algerian Sign Language gestures for "Eid al-Adha" (عيد الأضحى)

- ALSL Translation Dictionary Creation

This stage covers the first step of building the ALSL dictionary: transcribing each vocabulary entry (an Arabic word) in HamNoSys using the eSIGN Editor.

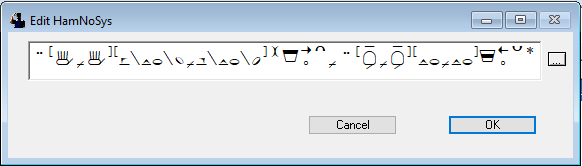

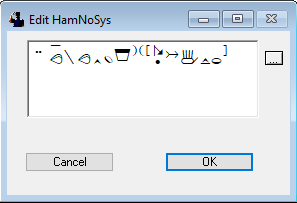

Figure 5 shows a HamNoSys transcription of the word "أسرة" (family) in ALSL, produced with the manual translation method, and Figure 6 shows the HamNoSys transcription of the word "give up" (French: abandonner) in LSF, obtained with the import method.

Figure 5: Manual HamNoSys translation for the word "أسرة" (family) in ALSL

Figure 6: Imported HamNoSys translation for the word "give up" (abandonner) in LSF

Testing and Validation: After adding an Arabic word to the dictionary, we evaluated how the avatar signs it from the word's HamNoSys encoding, using the 'Sign' function of the eSIGN Editor, which displays the result in the CWASA SiGML Player application. We then validated translation accuracy by comparing the animation with video recordings of native Algerian Sign Language signers or by asking Algerian Sign Language experts for feedback.

- Translating HamNoSys to SiGML

Once the first step of building the dictionary is complete, each entry's HamNoSys transcription is saved as a SiGML file that the 3D avatar can read. The conversion maps each HamNoSys symbol to its corresponding SiGML tag, producing an XML document that sign language animation software can render. The CWASA SiGML Player App then uses the SiGML file to animate the gestures on a virtual avatar.
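The symbol-to-tag matching described above can be sketched with Python's standard `xml.etree.ElementTree` module. This is a minimal illustration, not the conversion code used by the eSIGN tools: the three-entry table is a hypothetical fragment, its HamNoSys 4 code points (which sit in the Unicode Private Use Area) are assumptions to be checked against the official symbol table, the element names follow the H-SiGML layout as we understand it, and only the manual part of a sign is produced.

```python
import xml.etree.ElementTree as ET

# Hypothetical, partial symbol table: HamNoSys 4 Unicode code point -> SiGML tag.
# The code points are assumptions for illustration; verify them against the
# official HamNoSys 4.0 symbol table before relying on them.
HAMNOSYS_TO_TAG = {
    "\ue000": "hamfist",
    "\ue001": "hamflathand",
    "\ue020": "hamextfingeru",
}


def hamnosys_to_sigml(gloss, hamnosys, table=HAMNOSYS_TO_TAG):
    """Convert one HamNoSys string into an H-SiGML <sigml> document string."""
    root = ET.Element("sigml")
    sign = ET.SubElement(root, "hns_sign", gloss=gloss)
    ET.SubElement(sign, "hamnosys_nonmanual")  # no non-manual features in this sketch
    manual = ET.SubElement(sign, "hamnosys_manual")
    for symbol in hamnosys:
        tag = table.get(symbol)
        if tag is None:
            raise ValueError(f"no SiGML tag for symbol U+{ord(symbol):04X}")
        ET.SubElement(manual, tag)  # each HamNoSys symbol becomes one empty element
    return ET.tostring(root, encoding="unicode")
```

For an illustrative gloss, `hamnosys_to_sigml("family", "\ue001\ue020")` yields a `<sigml>` document with one `hns_sign` element whose manual part holds `hamflathand` and `hamextfingeru` elements. A complete converter would cover the whole HamNoSys symbol set and non-manual features.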

The CWASA system uses JavaScript and WebGL technologies to translate SiGML into animations for real-time virtual avatars on web-based applications. Client applications send SiGML data via TCP/IP to the server, which processes it to control the avatar’s movements. CWASA can also use XMLHttpRequest to dynamically fetch SiGML files or update avatar settings, enhancing user interaction and ensuring effective rendering of sign language animations.
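A client that pushes SiGML to a player over TCP/IP, as described above, can be sketched with Python's standard `socket` module. This is a hedged illustration: it assumes the player accepts raw UTF-8 SiGML text on a plain TCP connection with no framing beyond the client closing its sending side, and the function name `send_sigml` is hypothetical. The host, port, and exact protocol should be taken from the SiGML Player App documentation.

```python
import socket


def send_sigml(sigml, host, port, timeout=5.0):
    """Send one SiGML document to a player listening on host:port over TCP.

    Assumption: the player reads raw UTF-8 SiGML text and needs no framing
    beyond the client closing its sending side when the document is complete.
    """
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(sigml.encode("utf-8"))
        conn.shutdown(socket.SHUT_WR)  # tell the receiver the document is complete
```

Closing the write side gives the receiver an unambiguous end-of-document signal; a player that expects a different protocol would need a different client.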

- Text Processing

In this stage, we developed specific algorithms to process Arabic text input. The text is first cleaned with regular expressions. Multi-word expressions such as "بعد غد" ("the day after tomorrow") pose a further problem: because their words are separated by spaces, they could be wrongly treated as separate entries. To recognize such phrases correctly, we employ a sliding window algorithm.
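The two preprocessing steps just described can be sketched as follows. This is an illustration under our own assumptions, not the system's actual code: the regular expressions (which strip Arabic diacritics, the tatweel mark, and punctuation), the greedy longest-match variant of the sliding window, and the maximum window width of three words are all choices made for the sketch, since the exact patterns and window size are not specified.

```python
import re

# Arabic diacritics (tashkeel, U+064B..U+0652) and the tatweel elongation mark (U+0640).
DIACRITICS = re.compile(r"[\u064B-\u0652\u0640]")
# Anything other than Arabic letters, Western or Arabic-Indic digits, and whitespace
# (this also drops Latin letters and Arabic punctuation such as U+060C and U+061F).
NON_WORD = re.compile(r"[^\u0621-\u064A0-9\u0660-\u0669\s]")


def clean_arabic(text):
    """Regex cleanup: strip diacritics and punctuation, then collapse whitespace."""
    text = DIACRITICS.sub("", text)
    text = NON_WORD.sub(" ", text)
    return " ".join(text.split())


def segment(tokens, lexicon, max_width=3):
    """Sliding-window segmentation with greedy longest match.

    At each position, try windows of max_width words down to one word and keep
    the longest window that is a lexicon entry; a single word is always kept so
    that unknown words can be reported by the lookup step.
    """
    units, i = [], 0
    while i < len(tokens):
        for width in range(min(max_width, len(tokens) - i), 0, -1):
            candidate = " ".join(tokens[i:i + width])
            if width == 1 or candidate in lexicon:
                units.append(candidate)
                i += width
                break
    return units
```

With "بعد غد" in the lexicon, `segment(clean_arabic(text).split(), lexicon)` keeps that phrase as one unit, so it maps to a single sign rather than to the separate signs for "بعد" (after) and "غد" (tomorrow).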

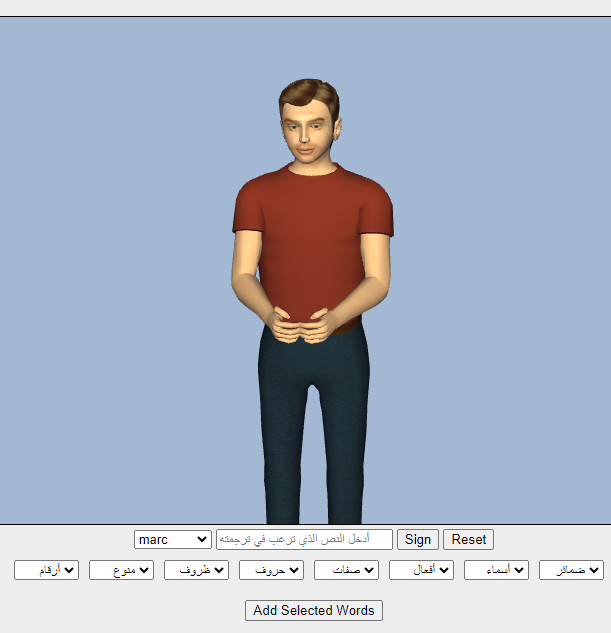

- UI Based on CWASA

User Interface Design: The user interface (UI) of the Automated Arabic-Algerian Sign Language Translation System is designed to be intuitive, allowing users to enter Arabic text and watch a 3D avatar perform the corresponding Algerian Sign Language (ALSL) signs. The CWASA (CWA Signing Avatars) player is integrated into the UI to render the avatar's sign language animations (see Figure 7).

Figure 7: Arabic Algerian 3D Avatar Translator System UI Based on CWASA

Tests

The ALSL Translation System was deployed on a website [1] so that users could test it and explore all of its components. The work was carried out in collaboration with specialists in Algerian Sign Language translation from the School for Deaf Children in Beni Slimane and with an association in Bouira province that specializes in sign language translation.

Integration testing revealed synchronization problems in the interaction between the translation engine and the 3D avatar animation; these were resolved by optimizing the communication protocols between modules. Subsequent testing confirmed that the flow from Arabic text input to 3D avatar animation is smooth and displays the correct signs.

All component-level tests passed once initial issues were fixed by improving the input-handling code. User acceptance testing confirmed that the interface is easy to use, with clear translations and a reasonable translation time; user comments led to changes in the control panel layout.

To ensure the accuracy and cultural relevance of the translation, we collaborated closely with experts in Algerian Sign Language (ALSL). This phase involved rigorous testing of the translation system using a comprehensive glossary of ALSL vocabulary. The experts’ feedback was crucial for refining the system and improving its accuracy.

Table 1 presents the system test results, based on feedback from experts in Algerian Sign Language translation.

The overall accuracy of our ALSL translation system is 75.53%.

Table 1. Dictionary evaluation

| Category | Expert test | Correct | Percent | Incorrect translations |
|---|---|---|---|---|
| Pronouns | ok | 3/3 | 100% | – |
| Nouns | ok | 219/227 | 96.48% | أستاذ (teacher), ألم (pain), أم (mother), إشارة (sign), اقتصاد (economy), امرأة (woman), يونيو (June), مكتبة (library) |
| Verbs | ok | 76/78 | 97.44% | لا يعلم (does not know), يأخذ (takes) |
| Adjectives | ok | 40/44 | 90.91% | آخر (other), صعب (difficult), مريض (sick) |
| Tools (particles) | ok | 11/12 | 91.67% | كيف (how) |
| Adverbs | ok | 14/17 | 82.35% | أمام (in front of), تحت (under), صباح (morning) |
| Miscellaneous | ok | 11/15 | 73.33% | فقط (only), مرة أخرى (again), مرحبا (hello), من فضلك (please) |
| Numbers | ok | 19/21 | 90.48% | 40, 90 |
| Total | | 315/417 | 75.53% | |
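Each category percentage in Table 1 is the number of correctly signed items divided by the number of items tested in that category. The short sketch below recomputes the per-category figures from the table's counts; the dictionary and function names are ours.

```python
# Correct / tested counts per category, copied from Table 1.
TABLE_1 = {
    "Pronouns": (3, 3),
    "Nouns": (219, 227),
    "Verbs": (76, 78),
    "Adjectives": (40, 44),
    "Tools": (11, 12),
    "Adverbs": (14, 17),
    "Miscellaneous": (11, 15),
    "Numbers": (19, 21),
}


def percent(correct, tested):
    """Accuracy as a percentage, rounded to two decimals."""
    return round(100 * correct / tested, 2)


for category, (correct, tested) in TABLE_1.items():
    print(f"{category}: {correct}/{tested} = {percent(correct, tested)}%")
```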

Conclusion

The Automated Arabic Algerian Sign Language Translation System is a significant step toward better communication within Algeria's Deaf community. Using the Notation System Method (NSM), this study developed a program that translates Arabic text into accurate, fluid ALSL signs performed by a 3D avatar.

The built dictionary, comprising 417 words, attained a verified accuracy rate of 75.53%. These results indicate a considerable step forward in 3D avatar-based sign language simulation, with prospects for further refinement and growth.

Several obstacles were addressed during the implementation, including the lack of high-quality ALSL datasets, the intricacy of motion capture technologies, and the complexities of effectively translating and animating sign language gestures.

In the future, we recommend focusing on expanding the ALSL dataset, enhancing the realism and precision of avatar animations, and optimizing system performance across various platforms. Collaboration with the ALSL community, along with further enhancement of the user interface, will be necessary to maintain the system’s relevance and efficacy.

Acknowledgments

We sincerely thank Mr. Youcef Benyahia for his expert contributions to our 3D avatars and animations. Special thanks go to Mrs. Dharbou Maroua, whose dedication as a sign language specialist greatly improved the quality of over 300 Algerian Sign Language (ALSL) gestures. We are also grateful to the teachers of the school for the deaf in Beni Slimane, Medea, for their essential feedback during prototype testing.

References

- Al-Barahamtoshy, O. H. and Al-Barhamtoshy, H. M. (2017). Arabic text-to-sign (ArTTS) model from automatic SR system. Procedia Computer Science, 117:304–311.

- Berdic, N., Mihic, S., and Dragan, D. (2016). 3D full body avatar applicability in consumer products. In The proceedings of international conference on Mass Customization and Personalization in Central Europe MCP-CE, pages 24–29.

- Bouzid, Y. and Jemni, M. (2013). An avatar based approach for automatically interpreting a sign language notation. In 2013 IEEE 13th International Conference on Advanced Learning Technologies, pages 92–94. IEEE.

- Da, Q. L., Khang, N. H. D., and Ngon, N. C. (2019). Converting the Vietnamese television news into 3D sign language animations for the deaf. In Industrial Networks and Intelligent Systems: 14th EAI International Conference, INISCOM 2018, Da Nang, Vietnam, August 27–28, 2018, Proceedings, pages 155–163. Springer.

- Deusens (2024). Avatars with motion capture technology for businesses. Accessed: 2024-05-27.

- Duarte, A., Palaskar, S., Ventura, L., Ghadiyaram, D., DeHaan, K., Metze, F., Torres, J., and Giro-i-Nieto, X. (2021). How2Sign: A large-scale multimodal dataset for continuous American Sign Language. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 2735–2744.

- Efthimiou, E., Fotinea, S.-E., Hanke, T., Glauert, J., Bowden, R., Braffort, A., Collet, C., Maragos, P., and Lefebvre-Albaret, F. (2012). Sign language technologies and resources of the Dicta-Sign project. In 5th Workshop on the Representation and Processing of Sign Languages: Interactions between Corpus and Lexicon. Satellite Workshop to the eighth International Conference on Language Resources and Evaluation (LREC-2012).

- Elliott, R., Glauert, J., Jennings, V., and Kennaway, R. (2004). An overview of the SiGML notation and SiGMLSigning software system. In sign-lang@LREC 2004, pages 98–104.

- Gachanja Wanjiru, B. (2022). Analysis of methods for creation of human 3D avatars. PhD thesis, ETSI Informatica.

- Gonçalves, D. A., Todt, E., and Sanchez Garcia, L. (2015). 3D avatar for automatic synthesis of signs for the sign languages. In WSCG 2015 Conference on Computer Graphics, Visualization and Computer Vision. Václav Skala-UNION Agency.

- Julien, T. (2009). Une vue d’ensemble de la reconnaissance de gestes. In Séminaire Gesture recognition.

- Kaur, K. (2016). HamNoSys to SiGML conversion system for sign language automation. Procedia Computer Science, 89:794–803.

- Lanesman, S. and Meir, I. (2012). The survival of Algerian Jewish Sign Language alongside Israeli Sign Language in Israel: Anthropological and linguistic insights. Sign Languages in Village Communities.

- Luqman, H. and Mahmoud, S. A. (2019). Automatic translation of Arabic text-to-Arabic Sign Language. Universal Access in the Information Society, 18(4):939–951.

- Mansour, M. S. (2007). Langage et surdité, descriptive de la langue des signes des sourds oranais (magistère). Université d'Oran Es-Sénia, page 124.

- Brour, M. and Benabbou, A. (2019). ATLASLang MTS 1: Arabic text language into Arabic sign language machine translation system. Procedia Computer Science, 148:236–245.

- Núñez-Marcos, A., Perez-de Viñaspre, O., and Labaka, G. (2023). A survey on sign language machine translation. Expert Systems With Applications.

- Academy of Sciences and Humanities in Hamburg (2024). DGS-Korpus project. https://web.dgs-korpus.de/hamnosys-97.html. [Online; accessed 19-June-2024].

- Othman, A., Dhouib, A., Chalghoumi, H., Elghoul, O., and Al-Mutawaa, A. (2024). The acceptance of culturally adapted signing avatars among deaf and hard-of-hearing individuals. IEEE Access.

- Othman, A. and El Ghoul, O. (2022). BuHamad: The first Qatari virtual interpreter for Qatari Sign Language. Nafath, 6(20).

- Othman, A. and Jemni, M. (2017). An XML-gloss annotation system for sign language processing. In 2017 6th International Conference on Information and Communication Technology and Accessibility (ICTA), pages 1–7. IEEE.

- Punchimudiyanse, M. and Meegama, R. G. (2015). 3D signing avatar for Sinhala Sign Language. In 2015 IEEE 10th International Conference on Industrial and Information Systems (ICIIS), pages 290–295. IEEE.

- Ready Player Me (2024). Animation, motion capture, and ai software: 3d full body avatar creator. Accessed: 2024-05-27.

- Algerian Sign Language page (2024). Algerian Sign Language page on Facebook. https://www.facebook.com/profile.php?id=100093996740140. [Online; accessed 19-June-2024].

- Top AI Tools (2024). Motion capture tools. Accessed: 2024-05-27.

- University of East Anglia (2024a). CWA Signing Avatars. https://vh.cmp.uea.ac.uk/index.php/CWA_Signing_Avatars. [Online; accessed 19-June-2024].

- University of East Anglia (2024b). eSIGN project. https://www.visicast.cmp.uea.ac.uk/eSIGN/index.html. [Online; accessed 19-June-2024].

- VisiCast Project (2024). Driving the SiGML Player App. https://vh.cmp.uea.ac.uk/index.php/Driving_the_SiGML_Player_App. Accessed: 2024-06-22.