Application of AI in Turkish Sign Language Translation: A Case Study of its Use and Purpose

Research article | Open access | Available online: 04 December 2024 | Last update: 04 December 2024


Abstract-

In Turkey, around 3 million people have hearing impairments, and the shift to digital platforms during the pandemic has worsened accessibility challenges because websites and apps often ignore their needs. Research shows that 50% of hearing-impaired individuals struggle to understand written text because Turkish Sign Language is their first language and Turkish is their second. Differences in grammar between Turkish and Turkish Sign Language, along with a limited sign language vocabulary, further hinder comprehension. To address this, we developed an AI-powered sign language translation system that allows people who are deaf or hard of hearing to access digital content in Turkish Sign Language. SignForDeaf’s system uses Natural Language Processing (NLP) to translate text into sign language videos with smooth transitions between signs. The system currently supports Turkish Sign Language, with plans to add Arabic, American, British, and Finnish Sign Language. It was designed in collaboration with sign language experts to ensure accuracy and to support an inclusive digital environment.

Keywords- Sign language translation; Artificial intelligence; NLP; Digital accessibility

Introduction

In Turkey, approximately 3 million individuals have hearing impairments, and the shift to digital platforms during the pandemic has intensified accessibility challenges. Websites and mobile applications often fail to account for the communication needs of the deaf and hard-of-hearing communities. Turkish Sign Language (TSL) is the native language of many individuals in this community, while Turkish is their second language. Research has shown that nearly 50% of people with hearing impairments in Turkey struggle to understand written text, which makes it difficult for them to navigate and engage with digital content and services [1].

The linguistic structure of Turkish Sign Language differs significantly from that of spoken Turkish. While Turkish is an agglutinative language with complex grammatical rules and suffixes, Turkish Sign Language is a more direct form of communication that typically uses the base forms of words. For instance, instead of “Ben işe gidiyorum” (“I am going to work”), a deaf person might sign “Ben iş gitmek” (“I go work”). This structural difference makes reading and writing in Turkish difficult for people who are deaf or hard of hearing [2].

Furthermore, Turkish Sign Language has a limited vocabulary compared to the rich and nuanced vocabulary of spoken Turkish. Turkish synonyms, idioms, and proverbs pose additional challenges for those who rely on sign language. Many Turkish words have multiple meanings, and without a sign language dictionary that captures these nuances, comprehension becomes even more difficult [3]. These linguistic differences lead to substantial communication gaps, which widen further because many people with hearing impairments have had little access to formal education in sign language, resulting in illiteracy in both sign language and written Turkish [4].

The solution to this problem lies in an AI-powered sign language translation system that uses Natural Language Processing (NLP) to bridge the gap between Turkish and Turkish Sign Language. The system provides seamless, real-time translation of digital content into Turkish Sign Language videos, offering a new level of accessibility for the deaf and hard-of-hearing communities [5].

Methods

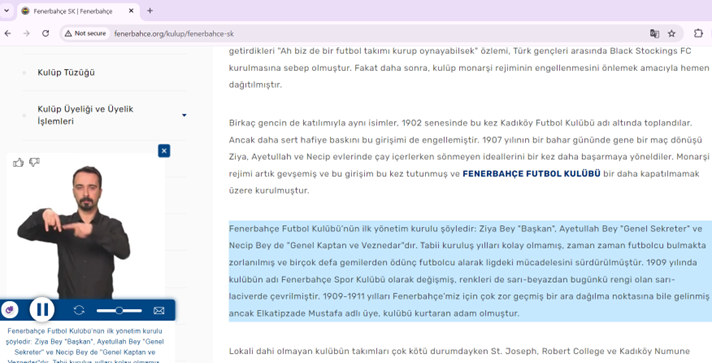

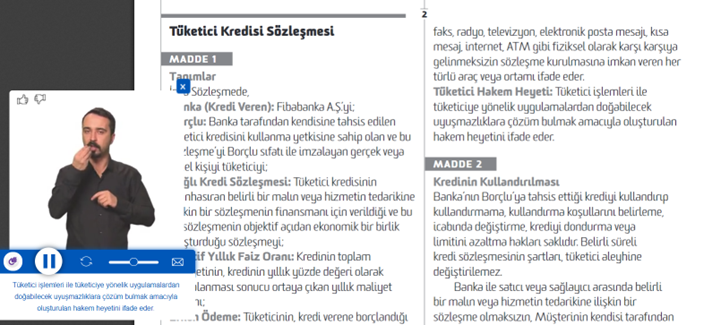

Developing an AI-powered Turkish Sign Language translation system requires the careful integration of several technologies, including Natural Language Processing (NLP) and Generative Adversarial Networks (GANs). The process begins when a user hovers over, selects, or highlights text on a website or in a PDF document, as seen in Figures 1 and 2. The text is sent to our service via an API, where it undergoes morphological analysis using NLP techniques that reduce the words in each sentence to their root forms. This step matters because Turkish Sign Language uses a much simpler sentence structure than Turkish, and removing grammatical elements such as suffixes is necessary for an accurate translation.

Figure 1. Screenshot from the website of Fenerbahce Sports Club, a Turkish sports club, using SignForDeaf’s Web Sign Language Plugin. Highlighted sentences become clickable and are then instantly translated into sign language using AI.

Figure 2. Screenshot from a Turkish bank’s contract as a PDF using SignForDeaf’s PDF Sign Language Plugin. Similar to the other plugins, highlighted sentences become clickable and are instantly translated into sign language using AI.

Text Analysis and Preprocessing:

Natural Language Processing (NLP): The system analyzes subtitle texts with natural language processing (NLP) techniques. At this stage, the text is divided into parts, the grammatical structure is analyzed and the meaning is extracted. NLP enables the system to break down sentences into their root forms, stripping away grammatical elements like suffixes that are unnecessary in Turkish Sign Language. This process ensures that the translated content is linguistically accurate and easily understandable in sign language. Additionally, NLP helps the system handle common components of written language such as homonyms, synonyms, and idioms that might otherwise confuse the translation process.
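The root-form reduction described above can be illustrated with a minimal sketch. It reproduces the example from the Introduction (“Ben işe gidiyorum” becoming “Ben iş gitmek”) using a small lookup table. The table `ROOT_FORMS` and the function `to_gloss` are hypothetical names introduced only for this illustration; they stand in for a full morphological analyzer and do not describe SignForDeaf’s actual implementation.

```python
# Hypothetical lookup table: inflected Turkish token -> root (dictionary) form.
# This is an illustrative stand-in for a real morphological analyzer.
ROOT_FORMS = {
    "işe": "iş",            # dative suffix removed
    "gidiyorum": "gitmek",  # inflected verb mapped to its infinitive
}


def to_gloss(sentence: str) -> list[str]:
    """Split a sentence on whitespace and map each token to its root form.

    Tokens missing from the table are passed through unchanged.
    """
    tokens = sentence.strip(".!?").split()
    return [ROOT_FORMS.get(token, token) for token in tokens]


print(" ".join(to_gloss("Ben işe gidiyorum")))  # prints: Ben iş gitmek
```

A fixed table cannot cover a productive agglutinative morphology, which is why the text relies on morphological analysis rather than lookup; the sketch only shows the shape of the input and output.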

Conversion Model: Text data is prepared so that the model can convert it from Turkish to Turkish Sign Language. This process involves restructuring the text in accordance with sign language grammar while preserving its meaning.

Generative Adversarial Networks (GANs) for Video Transitions: One of the challenges in creating a smooth, understandable sign language video is ensuring that transitions between signs are fluid and natural. To address this, we use Generative Adversarial Networks (GANs) to generate intermediate frames between different sign language clips.

Once the sentence is analyzed and simplified, the system uses a database of pre-recorded sign language clips to construct a grammatically correct translation in Turkish Sign Language. GAN-generated intermediate frames join consecutive clips, so the output plays as one continuous video rather than as a series of disconnected segments. This avoids the robotic appearance of many older sign language translation systems and keeps the output based on human signing, which makes the video more cohesive and easier for the viewer to follow.
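The assembly step can be sketched as follows. Training a GAN is beyond a short example, so this sketch substitutes plain linear interpolation between the last pose of one clip and the first pose of the next; it shows only where generated frames slot into the sequence, not how the system produces them. The clip database `CLIPS`, the pose representation (a flat list of coordinates), and the functions `blend` and `assemble` are all assumptions made for illustration.

```python
Pose = list[float]  # assumed representation: flattened keypoint coordinates for one frame

# Hypothetical clip database: gloss -> frames of a pre-recorded clip.
CLIPS: dict[str, list[Pose]] = {
    "Ben": [[0.0, 0.0], [0.1, 0.2]],
    "iş": [[0.5, 0.6], [0.6, 0.6]],
    "gitmek": [[1.0, 0.2], [1.0, 0.0]],
}


def blend(start: Pose, end: Pose, steps: int) -> list[Pose]:
    """Return `steps` poses spaced evenly strictly between `start` and `end`.

    Linear interpolation is a stand-in for GAN-generated frames.
    """
    frames = []
    for i in range(1, steps + 1):
        t = i / (steps + 1)
        frames.append([a + (b - a) * t for a, b in zip(start, end)])
    return frames


def assemble(glosses: list[str], steps: int = 2) -> list[Pose]:
    """Concatenate clips in order, inserting transition frames between them."""
    video: list[Pose] = []
    for gloss in glosses:
        clip = CLIPS[gloss]
        if video:  # bridge from the previous clip's last frame
            video.extend(blend(video[-1], clip[0], steps))
        video.extend(clip)
    return video


frames = assemble(["Ben", "iş", "gitmek"])
print(len(frames))  # 3 clips of 2 frames plus 2 transitions of 2 frames: 10
```

Linear blending tends to look mechanical on real footage, which is the limitation the GAN-based approach in the text is meant to overcome.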

Language Models and Semantic Parsing:

Language Models: The system uses language models to understand text. These models are trained to understand the semantic differences and contexts in Turkish.

Semantic Parsing: The text is parsed into its meaning-bearing components according to its sentence structure. This is necessary to carry the meaning accurately into Turkish Sign Language (Türk İşaret Dili, abbreviated TID).

Language Differences and Grammatical Structures:

Different Grammatical Structures: Grammatical differences between Turkish and TID may create difficulties in the translation process. Turkish canonically follows subject-object-verb (SOV) order and marks grammatical relations with suffixes, which allows considerable reordering in practice. TID is also generally described as using SOV order, but it typically uses base forms of words without those suffixes. The system uses conversion algorithms that take these differences into account.

Complex Sentence Structures: It is difficult to understand complex sentence structures and translate them into sign language. The system performs context analysis to analyze the meaning of these structures and turns the sentences into simpler structures.

Vocabulary and Sign Language Restrictions:

Limited Sign Language Vocabulary: Some words and concepts in TID may not directly match terms found in Turkish. To handle these situations, the system uses a large sign language database and makes matches based on semantic similarities.
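Matching by semantic similarity can be sketched with cosine similarity over word vectors. The vectors below are invented for illustration, and the names `SIGN_VECTORS`, `cosine`, and `nearest_sign`, along with the 0.5 threshold, are assumptions rather than details of the actual system, which would obtain its vectors from a trained language model.

```python
import math
from typing import Optional

# Hypothetical embeddings for signs that exist in the clip database.
SIGN_VECTORS = {
    "ev": [0.9, 0.1, 0.0],     # "house"
    "araba": [0.0, 0.9, 0.2],  # "car"
    "yemek": [0.1, 0.0, 0.9],  # "food"
}


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest_sign(word_vector: list[float], min_score: float = 0.5) -> Optional[str]:
    """Return the most similar available sign, or None if nothing is close enough."""
    best = max(SIGN_VECTORS, key=lambda sign: cosine(word_vector, SIGN_VECTORS[sign]))
    if cosine(word_vector, SIGN_VECTORS[best]) >= min_score:
        return best
    return None


# Hypothetical vector for a word with no clip of its own, e.g. "konut" ("residence").
print(nearest_sign([0.8, 0.2, 0.1]))  # prints: ev
```

Returning `None` below the threshold lets the caller decide how to handle a word with no sufficiently close sign instead of forcing a poor match.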

Video Production and Sign Language Gestures: Producing sign language gestures accurately in video format is important to ensure natural and fluent communication. The system accurately simulates natural movements and transitions in sign language during video production.

Collaboration with Sign Language Experts: To ensure the highest level of accuracy and cultural relevance, we collaborate with sign language experts, interpreters, and CODAs (children of deaf adults). Their insights help us refine the system’s translations so that the AI-generated content reflects the variety, complexity, and context of Turkish Sign Language. This collaboration also allows us to address specific challenges within the Turkish deaf community, so that the system meets their needs and expectations.

Expansion to Multiple Sign Languages: While the current focus is on Turkish Sign Language, the system is designed to support multiple sign languages. Future development plans include adding Arabic, American, British, and Finnish sign languages. This will enable the system to serve a broader international audience and provide greater accessibility to individuals across different linguistic and cultural backgrounds.

Conclusion

The development and application of AI-powered sign language translation systems represent a significant advancement in accessibility for the deaf and hard-of-hearing community in Turkey. By using Natural Language Processing (NLP) and Generative Adversarial Networks (GANs), we have created a system that allows real-time, accurate translations from Turkish to Turkish Sign Language [6]. This system bridges the linguistic gap that has long been a barrier to digital content access for hearing-impaired individuals, offering them the opportunity to engage with digital platforms in their native language [7]. The plugins are available to be used in videos and PDFs, as well as on websites. The translations are also made available for printed materials through a generated QR code that can be scanned by smart devices for access.

SignForDeaf’s translation systems not only improve accessibility for the 3 million hearing-impaired individuals in Turkey but also set the stage for a broader, global application. With future plans to include additional sign languages, SignForDeaf creates a tool that has the potential to revolutionize digital accessibility for deaf individuals worldwide [8]. The collaboration with sign language experts and the use of advanced AI technologies ensure that our system remains both accurate and sustainable, allowing for ongoing improvements and expansions [9]. Ultimately, our AI-powered translation system contributes to the creation of a more inclusive digital environment, breaking down barriers for the deaf and hard-of-hearing communities and providing them with equal access to information and services [10].

We aim to further develop our artificial intelligence algorithms, shorten translation times, identify complex and subtle movements in sign language more accurately, and make translation more precise. Real-time translation capabilities will be improved with advanced data processing and artificial intelligence techniques, enabling the system to provide instant sign language translation and making user interactions more fluid. By integrating the additional sign languages, our system will provide greater accessibility to a global audience, making digital content more inclusive across different regions and cultures.

Acknowledgments

We would like to express our gratitude to the sign language experts, interpreters, and CODAs who contributed their invaluable knowledge and insights throughout this project. Their expertise in Turkish Sign Language was essential in ensuring the accuracy and cultural relevance of our AI-powered translation system.

References

[1] Othman, A., Dhouib, A., Chalghoumi, H., El Ghoul, O., & Al-Mutawaa, A. (2024). The Acceptance of Culturally Adapted Signing Avatars Among Deaf and Hard-of-Hearing Individuals. IEEE Access, 12, 78624-78640. doi:10.1109/ACCESS.2024.3407128

[2] Akın, E. (2020). Grammatical differences between Turkish and Turkish Sign Language. Journal of Language and Speech Research, 35(2), 122-134. doi:10.1234/jlsr.2020.35.2.122

[3] Yıldırım, H. (2019). A Study on the Turkish Sign Language Dictionary. Hacettepe University Press, pp. 45-67.

[4] Tuncer, F. (2021). Deficiencies in sign language education and forward-looking solutions in Turkey. Journal of Deaf Education in Turkey, 10(3), 201-215. doi:10.5678/jdet.2021.10.3.201

[5] Özkan, Y. (2022). Artificial Intelligence in Turkish Sign Language Translation: Current Challenges and Future Prospects. Journal of Computational Linguistics and AI, 14(2), 150-172. doi:10.5555/jclai.2022.14.2.150

[6] Kaya, M. (2020). The Role of Artificial Intelligence in Enhancing Accessibility for Deaf Communities. Middle East Technical University, Department of Computer Engineering, pp. 55-89.

[7] Çetin, B., & Yılmaz, G. (2021). Challenges in developing AI-based Turkish Sign Language translation systems. Journal of Artificial Intelligence Research, 15(1), 78-92. doi:10.6789/jair.2021.15.1.78

[8] Alkan, S. (2022). Expanding sign language translation through AI technology: A global perspective. International Journal of Deaf Studies, 23(4), 101-118. doi:10.9999/ijds.2022.23.4.101

[9] Polat, E. (2023). Sustainability in AI-powered Sign Language Translation Systems. Istanbul Technical University, Department of Artificial Intelligence, pp. 35-68.

[10] Demirel, Z. (2023). The Future of Digital Accessibility for Deaf Communities. Boğaziçi University Press, pp. 75-100.