The Development of AI-Powered Automatic Video Sign Language Translation Systems

Research article | Open access

Available online on: 04 December, 2024

Last update: 04 December, 2024

Abstract

People who are deaf or hard of hearing often have difficulty fully understanding written subtitles in videos, because sign languages and spoken languages differ and each has its own grammar and structure. Many social media platforms provide automatic subtitles, but these are often insufficient for accessibility; sign language interpretation is needed to make video content fully accessible. Traditionally, adding sign language to a video means recording a separate interpretation video and embedding it, a time-consuming process that must be redone whenever the original video changes. The plugin presented here simplifies this process by translating directly from subtitle files, so subtitle updates are reflected dynamically, significantly reducing the time, effort, and cost involved. It provides sign language support for any video without modifying the original content. The plugin integrates with video players and offers a user-controlled, customizable sign language window that can be activated, moved, resized, or turned off as desired.

Keywords: Sign language translation; Artificial intelligence; Natural language processing (NLP); Digital accessibility

Introduction

The barriers faced by individuals who are deaf or hard of hearing often go beyond auditory challenges and extend to written language comprehension, which can exclude them from essential parts of daily life. Spoken and sign languages differ not only in modality but also in syntax, grammar, and semantics. Because of this fundamental divergence, subtitles alone cannot ensure full accessibility for deaf and hard-of-hearing communities. Even with the widespread adoption of automatic subtitles across social media platforms and streaming services, many individuals find these captions difficult to follow, because they do not reflect the grammar or nuances of sign language. Digital accessibility efforts that rely solely on written subtitles therefore overlook an essential channel of communication: information conveyed through sign language.

Numerous studies have highlighted the importance of sign language in enhancing accessibility for deaf and hard-of-hearing audiences, emphasizing that subtitles, while helpful, are not a substitute for sign language interpretation [1]. For instance, a report by the World Federation of the Deaf states that over 70 million people worldwide use sign language as their primary means of communication, underscoring the importance of media that includes sign language translation [3]. It is therefore critical to go beyond subtitle-only accessibility measures and incorporate sign language interpretation into video content to meet the needs of people who are deaf or hard of hearing.

This paper examines the traditional methods of integrating sign language into video content, their limitations, and how modern technology, particularly artificial intelligence (AI), can transform this process. We propose a set of web, video, and PDF plugins that generate sign language interpretations in real time, synchronized with subtitles. The system offers an affordable, sustainable solution that adapts to video updates, so that deaf and hard-of-hearing users retain continuous access to fully accessible content.

Methods

The process of developing a fully accessible video content platform for the deaf and hard of hearing requires overcoming several challenges, both technical and practical. Traditionally, the inclusion of sign language interpretation in videos involves a multi-step process, which starts with the script being translated into sign language by a trained interpreter. This process necessitates a professional studio setup where the interpreter’s video is recorded, edited, and then embedded into the original video content. Each time the video or subtitles are updated, the sign language video must also be redone, which is both time-consuming and expensive. This method limits the scalability and feasibility of adding sign language support to a wide range of video content.

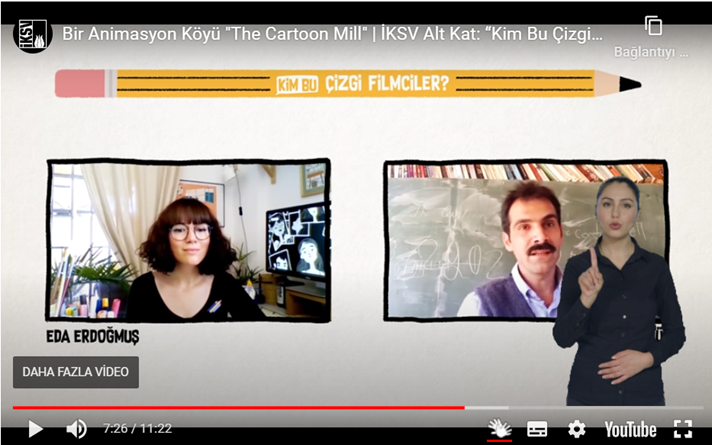

Our approach transforms this process by using artificial intelligence (AI) and subtitle synchronization techniques. The system extracts information from the video's subtitle file and uses it to generate real-time, frame-by-frame sign language interpretations. This eliminates costly re-recording and post-production editing whenever the video content or subtitles change (Figure 1).
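The article does not specify which subtitle format the plugin reads. As a minimal sketch, assuming SRT input, the first step of such a pipeline (turning a subtitle file into timed text segments that can each be translated into sign language) could look like the Python below. `Cue` and `parse_srt` are hypothetical names, not SignForDeaf's API.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Cue:
    """One timed subtitle segment (hypothetical data model)."""
    start: float  # seconds from the start of the video
    end: float    # seconds from the start of the video
    text: str     # subtitle text to be translated into sign language


# Matches SRT timing lines such as "00:01:02,500 --> 00:01:05,000".
_TIMING = re.compile(
    r"(\d{2}):(\d{2}):(\d{2})[,.](\d{3})\s*-->\s*"
    r"(\d{2}):(\d{2}):(\d{2})[,.](\d{3})"
)


def _to_seconds(h: str, m: str, s: str, ms: str) -> float:
    return int(h) * 3600 + int(m) * 60 + int(s) + int(ms) / 1000


def parse_srt(content: str) -> List[Cue]:
    """Parse SRT subtitle text into cues sorted by start time."""
    cues = []
    content = content.replace("\r\n", "\n")  # tolerate Windows line endings
    # SRT entries are separated by blank lines: index, timing line, text lines.
    for block in re.split(r"\n\s*\n", content.strip()):
        lines = [line.strip() for line in block.splitlines() if line.strip()]
        for i, line in enumerate(lines):
            match = _TIMING.search(line)
            if match:
                g = match.groups()
                text = " ".join(lines[i + 1:])  # join multi-line subtitles
                cues.append(Cue(_to_seconds(*g[:4]), _to_seconds(*g[4:]), text))
                break
    return sorted(cues, key=lambda c: c.start)
```

Because each cue carries its own timing, a later edit to one subtitle only changes the cue it belongs to, which is what makes per-cue regeneration possible.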

Figure 1. A screenshot from Istanbul Arts and Culture Foundation’s video, using SignForDeaf’s Video Sign Language Translation Plugin from their website (https://www.iksv.org/tr/haberler/iksv-alt-kat-yepyeni-bir-cevrimici-seriye-basliyor-kim-bu-cizgi-filmciler).

The core of this solution is its ability to translate subtitle updates into sign language on an ongoing basis, providing a continuous and accurate interpretation that stays synchronized with the video. Integrating the system with existing video platforms, such as YouTube, is straightforward and requires no modification to the original video file. Instead, the plugin acts as an overlay, allowing users to activate, move, resize, or disable the sign language window according to their preferences. This lets each viewer tailor the system to their own needs, enhancing accessibility without compromising the visual integrity of the video.
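To stay synchronized without modifying the video file, an overlay only needs to read the player's current playback time and look up which subtitle cue is active. The sketch below does this with a binary search over cue start times. `CueTimeline` is a hypothetical helper, and it assumes cues do not overlap; the article does not say how overlapping cues are handled.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Cue:
    start: float  # seconds
    end: float    # seconds
    text: str


class CueTimeline:
    """Finds the cue (and hence the sign clip) active at a playback time.

    Hypothetical helper; assumes non-overlapping cues.
    """

    def __init__(self, cues: List[Cue]) -> None:
        self._cues = sorted(cues, key=lambda c: c.start)
        self._starts = [c.start for c in self._cues]

    def active_at(self, t: float) -> Optional[Cue]:
        # Index of the last cue whose start time is <= t.
        i = bisect_right(self._starts, t) - 1
        if i >= 0 and t < self._cues[i].end:
            return self._cues[i]
        return None  # between cues: no signing is shown
```

A player integration would call `active_at` whenever the playback position changes (for example on the HTML5 video `timeupdate` and `seeked` events) and show the sign clip for the returned cue, hiding the signer when it returns `None`.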

Additionally, the plugin is designed with future adaptability in mind. As new sign languages are added, the system can be updated to accommodate different regions and languages, ensuring its applicability in diverse linguistic contexts. The modular design also allows further AI advances, such as improved real-time language recognition and more varied interpretations of complex sentence structures, to be incorporated without major changes to the existing framework.
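One way to realize the modular design described above is a registry keyed by sign language, so that supporting a new language means registering one more translator rather than changing the player integration. This is a hypothetical sketch: the `Translator` signature (subtitle text in, list of sign identifiers out) and the `TranslatorRegistry` class are assumptions, since the article does not describe the model's interface.

```python
from typing import Callable, Dict, List

# Hypothetical interface: maps subtitle text to a sequence of sign
# identifiers (e.g. gloss tokens or animation clip IDs).
Translator = Callable[[str], List[str]]


class TranslatorRegistry:
    """Per-language translators; adding a language is one register() call."""

    def __init__(self) -> None:
        self._translators: Dict[str, Translator] = {}

    def register(self, language: str, translator: Translator) -> None:
        # Re-registering a language replaces its translator, e.g. with an
        # improved model, without touching any other language.
        self._translators[language] = translator

    def languages(self) -> List[str]:
        return sorted(self._translators)

    def translate(self, language: str, text: str) -> List[str]:
        try:
            translator = self._translators[language]
        except KeyError:
            raise ValueError(f"no translator registered for {language!r}") from None
        return translator(text)
```

Keeping the language-specific model behind a single callable is what would let an improved model be swapped in without changes to synchronization or window handling.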

Automatic Subtitle Synchronization

One of the most innovative aspects of our plugin is its use of artificial intelligence (AI) to synchronize subtitles with the corresponding sign language translation. In traditional systems, sign language videos must be carefully timed and manually synchronized with the video content, a tedious process, especially for frequently updated videos. Each time a subtitle is added or modified, the sign language translation must be re-recorded and the video re-integrated, consuming both time and resources.

SignForDeaf’s AI-powered solution adjusts dynamically to changes in the subtitle files, updating the corresponding sign language translation automatically without manual intervention. Synchronization therefore keeps pace with frequent updates. This level of automation keeps the signing accurate with respect to the current subtitles and lets the system handle large volumes of content, making it scalable for organizations that produce frequent and varied video output, a capability that previous solutions lack.
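The article does not say how changes in a subtitle file are detected. A plausible sketch, assuming translations are cached by subtitle text, is shown below: on each update, cues whose whitespace-normalized text was seen before reuse their cached translation even if their timing changed, and only new or edited text is sent to the translator. `IncrementalTranslator` and the injected `translate` callable are hypothetical.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Cue:
    start: float  # seconds
    end: float    # seconds
    text: str


def _key(text: str) -> str:
    # Normalize whitespace so cosmetic edits do not trigger re-translation.
    return hashlib.sha256(" ".join(text.split()).encode("utf-8")).hexdigest()


class IncrementalTranslator:
    """Re-translates only subtitle text not seen in earlier updates (sketch)."""

    def __init__(self, translate: Callable[[str], List[str]]) -> None:
        self._translate = translate
        self._cache: Dict[str, List[str]] = {}

    def update(self, cues: List[Cue]) -> Tuple[List[Tuple[Cue, List[str]]], int]:
        """Return (cue, signs) pairs for the new file and the count of fresh translations."""
        fresh = 0
        result = []
        for cue in cues:
            k = _key(cue.text)
            if k not in self._cache:
                self._cache[k] = self._translate(cue.text)  # new or edited text
                fresh += 1
            # Timing always comes from the new cue; signs may come from the cache.
            result.append((cue, self._cache[k]))
        return result, fresh
```

Under this design, retiming a subtitle file costs no translation work at all, and editing one line triggers exactly one new translation.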

Customizable Sign Language Window

A key feature that sets our plugin apart is the fully customizable sign language window. Accessibility solutions are often criticized for being rigid, so the Video Sign Language Translation system was designed with user experience as a priority. Viewers have complete control over how the sign language window is displayed: they can move it, resize it, or disable it, adjusting the display to their viewing environment and personal comfort. For example, a user watching on a small screen, such as a mobile phone, may prefer to shrink the window or move it to a corner where it does not obscure important visual elements. Conversely, a user watching on a larger screen may enlarge the window for better visibility.
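The window controls described above reduce to a small amount of geometry: moving and resizing the overlay while keeping it inside the player and preserving the signer's aspect ratio. The sketch below assumes pixel coordinates relative to the player and an arbitrary 80-pixel minimum width; `SignWindow`, `move`, `resize`, and `toggle` are illustrative names, not the plugin's actual API.

```python
from dataclasses import dataclass, replace

MIN_WIDTH = 80  # assumed minimum width (px) so the signer stays legible


@dataclass(frozen=True)
class SignWindow:
    """Overlay state in player pixels (hypothetical model)."""
    x: int
    y: int
    width: int
    height: int
    visible: bool = True


def _clamp(value: int, low: int, high: int) -> int:
    return max(low, min(value, high))


def move(win: SignWindow, x: int, y: int, player_w: int, player_h: int) -> SignWindow:
    """Move the window, clamping it so it never leaves the player area."""
    return replace(win,
                   x=_clamp(x, 0, player_w - win.width),
                   y=_clamp(y, 0, player_h - win.height))


def resize(win: SignWindow, width: int, player_w: int, player_h: int) -> SignWindow:
    """Resize while keeping the aspect ratio and keeping the window inside the player."""
    aspect = win.height / win.width
    width = _clamp(width, MIN_WIDTH, player_w)
    height = round(width * aspect)
    if height > player_h:  # too tall for the player: shrink to fit
        height = player_h
        width = round(height / aspect)
    # Re-apply the move clamp so a grown window is pulled back inside.
    return move(replace(win, width=width, height=height), win.x, win.y, player_w, player_h)


def toggle(win: SignWindow) -> SignWindow:
    """Turn the overlay on or off; the underlying video is never touched."""
    return replace(win, visible=not win.visible)
```

Returning new immutable `SignWindow` values, rather than mutating one, could make each user action easy to undo or to store as a saved preference.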

This user-centered approach keeps the sign language interpretation from interfering with the main video content while keeping it accessible whenever needed. Users can also enable or disable the sign language feature at any time, so those who do not need it can watch the video without distraction. This flexibility can significantly improve the accessibility and inclusiveness of video content, particularly for deaf and hard-of-hearing communities.

Reduced Time and Cost

Traditional methods of adding sign language interpretation to videos are often prohibitively expensive and time-consuming. The process typically involves hiring a professional sign language interpreter, recording their translation in a studio, and then integrating the sign language video into the main content through post-production editing. This requires significant human and financial resources, which can make it infeasible for small content creators or organizations with limited budgets. Furthermore, any change to the original video or subtitle text requires repeating the entire process, incurring the same time and cost again.

Our plugin removes these obstacles by eliminating the need for repeated reshooting and manual integration. Once the plugin is set up, the sign language interpretation is generated automatically from the subtitles, so any change to the subtitles is immediately reflected in the sign language translation. This drastically reduces the time and cost of maintaining accessible video content, enabling more creators, educators, and organizations to provide sign language support without a heavy financial burden. For instance, at a large educational institution or media platform producing hundreds of videos each month, the time savings can be substantial, freeing resources for new content or other accessibility features.

Additionally, the reduced cost of implementation means that smaller organizations or independent creators, who previously might have been unable to afford sign language support, can now offer fully accessible videos. This broadens the reach of accessible content across different platforms and industries, from educational videos and online courses to entertainment and corporate training materials. Ultimately, this not only benefits the deaf and hard-of-hearing community by providing more inclusive content, but also encourages a more widespread adoption of accessibility practices across the media landscape.

Conclusion

The implementation of AI-powered sign language translation systems represents a significant advancement in making digital content more accessible to deaf and hard-of-hearing communities. By addressing the limitations of traditional subtitle-based accessibility, this solution offers a more inclusive, dynamic way to provide synchronized sign language interpretation. Not only does this approach alleviate the time and financial burdens typically associated with creating sign language videos, but it also ensures that accessibility features can be easily updated as content evolves. This is particularly important in educational, entertainment, and professional contexts, where content is frequently refreshed and updated.[2]

Furthermore, this system opens new possibilities for the future of accessibility in media. As AI continues to advance in areas such as natural language processing (NLP) and gesture recognition, we can expect more sophisticated and contextually accurate translations, potentially bridging gaps between different sign languages and spoken languages worldwide.[5] This could be transformative not only for the deaf and hard-of-hearing community but for society as a whole, as it promotes inclusivity and breaks down communication barriers across linguistic and cultural lines.[4]

By integrating AI-powered sign language translation into mainstream video platforms, content creators and organizations have the opportunity to significantly improve the viewing experience for their audiences. The customizable, user-friendly nature of the Video Sign Language Translation Plugin allows individuals to tailor their accessibility experience, making it a versatile tool that can accommodate a wide range of personal preferences and needs. Moreover, as more organizations adopt these technologies, we can anticipate a broader cultural shift toward accessibility as a normal part of digital content, benefiting not only the deaf and hard-of-hearing community but also deepening society’s collective understanding of inclusivity.[6]

In conclusion, the widespread adoption of AI-powered sign language translation tools has the potential to reshape the video landscape of the web, making digital content accessible to all. It is a solution that not only addresses current accessibility challenges but also paves the way for future advancements, ensuring that as technology progresses, no one is left behind.

Acknowledgments

We would like to thank our colleagues and research partners for their invaluable contributions to this project.

References

[1] Wilson, M., & Moffat, P. (2018). The impact of subtitles and sign language on video accessibility. Journal of Deaf Studies and Deaf Education, 23(2), 204-215. doi:10.1093/deafed/eny012

[2] Arfé, B., Rossi, C., & Sicoli, S. (2014). The role of sign language in reading comprehension for deaf individuals. Frontiers in Psychology, 5, 1174. doi:10.3389/fpsyg.2014.01174

[3] World Federation of the Deaf (2021). Global accessibility report on sign language use in media. WFD Publications, pp. 12-36.

[4] Liddell, S. K. (2003). Grammar, Gesture, and Meaning in American Sign Language. Cambridge University Press, pp. 45-89. ISBN: 9780521016505

[5] Berke, J. (2020). AI and Accessibility: Bridging the communication gap for the deaf community. AI Journal of Linguistics, 15(3), 235-250. doi:10.1111/ail.153235

[6] Napier, J., Leigh, G., & Goswell, D. (2016). Sign Language Interpreting: Theory and Practice in Australia and New Zealand. Federation Press, pp. 102-145. ISBN: 9781760021162