Moving Beyond Limits: ALMOURAFEK’s Trailblazing Innovation for the Visually Impaired

Research article | Open access

Available online on: 16 September 2025

Last update: 16 September 2025

Abstract-

Recent advances in technology have prompted innovative solutions for navigation assistance for visually impaired individuals. Despite this progress, autonomous navigation technologies remain difficult to deploy because of limitations in accuracy, cost, and dependency on internet connectivity. This paper introduces ‘ALMOURAFEK,’ a smart wearable device with a fully embedded AI solution for indoor scene analysis and interpretation. It transforms images of indoor environments into actionable information and formulates it as verbal messages, transmitted via Bluetooth (BT) to the user in their preferred language and dialect. The paper details ALMOURAFEK’s software, hardware, and design, with emphasis on how its AI is embedded on the device. A comparative analysis then positions ALMOURAFEK against existing solutions, highlighting how it addresses limitations prevalent in the market.

Keywords- Visual Impairment; Navigation Assistance; Wearable Device; Embedded AI Solution; Scene Interpretation.

1. Introduction

In recent years, the field of navigation assistance for visually impaired individuals has witnessed the emergence of various technological solutions, such as LiDAR sensors, voice recognition systems, and image processing algorithms. However, using these technologies for autonomous navigation by visually impaired individuals poses notable challenges related to accuracy, cost, and ease of use. Moreover, because most existing systems rely on an Internet connection, their functionality is restricted in areas lacking connectivity.

This paper introduces ‘ALMOURAFEK,’ a smart wearable device designed to detect and recognize obstacles and stairs. ALMOURAFEK stands out for its multilingual capabilities, its ease of use (no training is required), and, notably, its ability to operate entirely offline, which enhances performance and ensures user privacy.

We start with a brief survey of the current technological landscape in navigation assistance for the visually impaired. We then present ALMOURAFEK in detail, covering its software, hardware, and design features. This is followed by a critical comparative analysis between ALMOURAFEK and existing solutions available on the market. Finally, we conclude by discussing the role ALMOURAFEK can play in advancing navigation assistance technologies for the visually impaired.

2. State of the Art in Navigation Assistance Technologies for the Visually Impaired

According to the World Health Organization’s 2021 statistics, approximately 253 million individuals worldwide are affected by visual impairment (VI): 36 million are blind and 217 million have low vision. These figures highlight the pressing need for robust and accessible assistive technologies that improve the quality of life of visually impaired individuals.

- Evolution of Assistive Solutions

Over the past decade, the development of assistive solutions that help visually impaired individuals navigate indoor and outdoor environments has grown significantly. Efforts have focused on supporting users’ cognition during navigation and improving their overall quality of life. Surveys such as [1, 2] have assessed wearable and portable assistive devices for blind and visually impaired people, highlighting their strengths and limitations.

- Sensorial/Camera-Based Devices

Despite their promising potential, sensorial and camera-based substitution devices have not been widely adopted by the visually impaired community. Challenges persist, notably the difficulty users face in building comprehensive cognitive maps of their environment, which hinders seamless navigation and the integration of these technologies into daily life [3, 4]. In addition, limited accuracy and precision in the proposed architectures have posed further hurdles [5].

- Mobile Platforms and Cloud-Based Solutions

Recent years have witnessed a proliferation of research on sensorial substitution for human vision, often leveraging mobile platforms and cloud resources to offer a wide range of assistive applications. However, their reliance on internet connectivity and testing confined to controlled laboratory environments have limited their societal integration and usability [6].

- Hybrid Technologies for Enhanced Navigation

To bridge the gap between current assistive technologies and human-level semantic understanding of a scene, one proposed direction is to hybridize technologies, combining computer vision with voice recognition in wearable devices. Such integration aims to provide a versatile, hands-free assistive device that is widely accepted within the visually impaired community [7, 8].

- Wayfinding Technologies in Smart Cities

Inclusive design principles in smart cities emphasize the importance of accessible wayfinding technologies for individuals with disabilities. Public spaces, including transport hubs, entertainment centers, and tourist attractions, are increasingly adopting indoor and outdoor navigation solutions to ensure inclusivity and accessibility [9, 10].

- Challenges Faced by Wearable Assistive Devices

Wearable assistive devices have historically faced multifaceted challenges that hinder their widespread adoption among visually impaired individuals. Complex operating procedures, high costs, and limited real-world effectiveness have posed substantial barriers to their integration into daily life [1, 12]. Moreover, socio-psychological factors, including reluctance to adopt new technologies, have impeded user acceptance.

A prominent limitation of existing solutions is their dependency on internet connectivity. Constant connectivity is not always feasible or desirable when navigating different environments, so this reliance restricts users’ freedom of movement. It also raises concerns about user privacy and autonomy, which can further slow the adoption of such technologies in everyday life.

3. ALMOURAFEK: A Wearable Device with Fully Embedded AI for the Visually Impaired

ALMOURAFEK is designed to address the challenges faced by the approximately 253 million visually impaired individuals worldwide. While most of them still rely on white canes, they remain exposed to collisions, stair accidents, and disorientation. Meanwhile, the minority with access to technology, notably smartphones, face usability difficulties, since these tools require both technical proficiency and internet connectivity.

To bridge these gaps, ALMOURAFEK provides a comprehensive and secure solution. Our system uses a Time-of-Flight (TOF) camera to capture the environment in real time, together with tailored artificial intelligence to detect various obstacles. Crucial information is then relayed to users in their preferred language and dialect. ALMOURAFEK’s key advantage is that it operates without an internet connection, ensuring user safety and independence even in areas without connectivity. It is also easy to use and requires no prior training: the user simply switches it on and listens.
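The paragraph above describes a pipeline that ends with a spoken message in the user's preferred language. The sketch below illustrates only that last text-generation step, assuming each detection arrives as a hazard kind plus a forward distance. The template table, the `compose_message` helper, and the whole-metre rounding rule are hypothetical and are not ALMOURAFEK's actual implementation; the resulting string would then go to an on-device text-to-speech engine and the Bluetooth earpiece, which the sketch leaves out.

```python
from typing import Dict

# Hypothetical phrase templates keyed by language code; supporting a dialect
# would mean adding another entry written by a native speaker.
TEMPLATES: Dict[str, Dict[str, str]] = {
    "en": {
        "stairs_up": "Stairs going up, {d} m ahead.",
        "stairs_down": "Stairs going down, {d} m ahead.",
        "obstacle": "Obstacle, {d} m ahead.",
    },
    "fr": {
        "stairs_up": "Escalier montant à {d} m.",
        "stairs_down": "Escalier descendant à {d} m.",
        "obstacle": "Obstacle à {d} m.",
    },
}


def compose_message(kind: str, distance_m: float, lang: str, fallback: str = "en") -> str:
    """Turn one detection into a short sentence in the user's preferred language."""
    table = TEMPLATES.get(lang, TEMPLATES[fallback])  # unknown language -> fallback table
    # Whole metres are easier to act on while walking; never announce "0 m".
    d = max(1, round(distance_m))
    return table[kind].format(d=d)
```

Keeping the wording in data rather than code means a new language or dialect can be added without touching the detection logic, and everything runs offline.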

- ALMOURAFEK: A Pioneering Intelligent Wearable Device

“ALMOURAFEK” is a smart wearable device equipped with specialized artificial intelligence that analyzes and interprets the scene surrounding a visually impaired user. It extracts the essential information and conveys it verbally to the user through a Bluetooth (BT) earpiece. ALMOURAFEK detects and recognizes scene components in both indoor and outdoor environments. It supports multiple languages, requires no training, and can operate without an internet connection, which improves system performance, allows it to work anywhere, and protects user privacy.

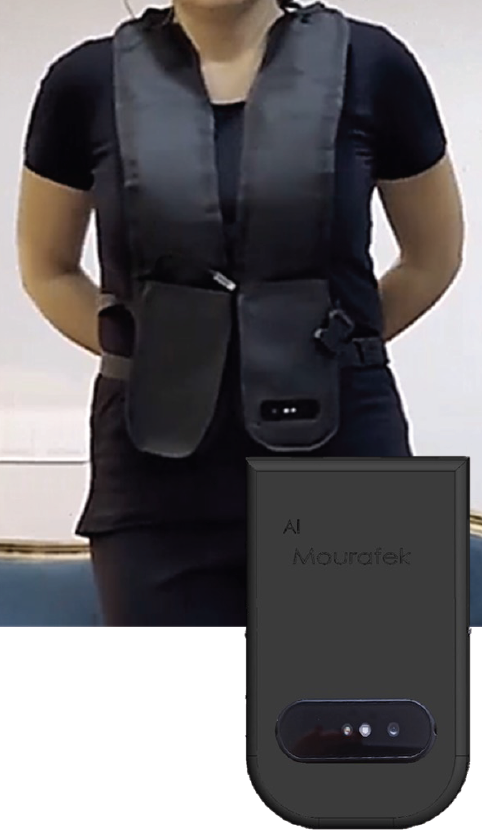

The device consists of a TOF camera, a Bluetooth earpiece, and a rechargeable battery (refer to Figure 1). It is lightweight and compact.

Figure 1. ALMOURAFEK basic device.

- Accessories for Enhanced User Experience

ALMOURAFEK is designed to offer a simple, intuitive, and completely hands-free user experience. It can be worn discreetly as a pendant or attached to an accessory such as a scarf (refer to Figure 2). It requires no physical manipulation during use, so the user can focus fully on their movements. A single button activates the device, and voice feedback is delivered through a Bluetooth earpiece.

Figure 2. ALMOURAFEK being worn on a scarf.

- A User-Centric Development Approach

The device relies on algorithms that we developed and published in a series of previous papers [13-19]. These algorithms give ALMOURAFEK a significant competitive advantage: they detect a wide range of obstacles quickly and efficiently, in particular ascending and descending stairs and suspended obstacles, which remain a major hazard even with currently available solutions.
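The published methods cited above rely on possibilistic modeling [13-19], and the sketch below does not reproduce them. It is a deliberately simple geometric stand-in that shows why stairs and suspended obstacles can be told apart from depth data: stairs appear as riser-sized jumps in the floor height ahead, while a suspended obstacle occupies the walking corridor between cane height and head height with nothing below it for a white cane to touch. It assumes the 3D points have already been rotated into a gravity-aligned frame (height measured from the floor, for example using the camera's mounting angle); all thresholds and function names are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

# Illustrative thresholds (assumptions, not values from the ALMOURAFEK papers).
RISER_MIN_M = 0.10     # smallest height jump treated as a stair riser
RISER_MAX_M = 0.22     # largest height jump treated as a stair riser
CANE_HEIGHT_M = 0.7    # a white cane is assumed to sweep below this height
HEAD_HEIGHT_M = 2.0    # assumed clearance needed by a walking user


@dataclass
class Hazard:
    kind: str          # "stairs_up", "stairs_down" or "suspended_obstacle"
    distance_m: float  # forward distance from the user


def detect_stairs(profile: Sequence[Tuple[float, float]]) -> Optional[Hazard]:
    """Find the nearest riser in a floor profile [(forward_m, floor_height_m), ...]
    sorted by forward distance."""
    for (_, h0), (d1, h1) in zip(profile, profile[1:]):
        jump = h1 - h0
        if RISER_MIN_M <= abs(jump) <= RISER_MAX_M:
            return Hazard("stairs_up" if jump > 0 else "stairs_down", d1)
    return None


def detect_suspended(points: Sequence[Tuple[float, float, float]],
                     corridor_half_width_m: float = 0.4,
                     floor_tol_m: float = 0.05,
                     depth_tol_m: float = 0.2) -> Optional[Hazard]:
    """points: (lateral_m, height_m, forward_m) in a gravity-aligned frame.
    Flags the nearest obstacle between cane and head height that has nothing
    below it (at about the same distance) that the cane could touch first."""
    corridor = [(h, f) for (x, h, f) in points if abs(x) <= corridor_half_width_m]
    low = [f for (h, f) in corridor if floor_tol_m < h < CANE_HEIGHT_M]
    high = [f for (h, f) in corridor if CANE_HEIGHT_M <= h <= HEAD_HEIGHT_M]
    suspended = [f for f in high if all(abs(f - g) > depth_tol_m for g in low)]
    return Hazard("suspended_obstacle", min(suspended)) if suspended else None
```

A table, for instance, has legs below its top and is left to the cane, whereas a hanging sign or an open cupboard door at head height is reported.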

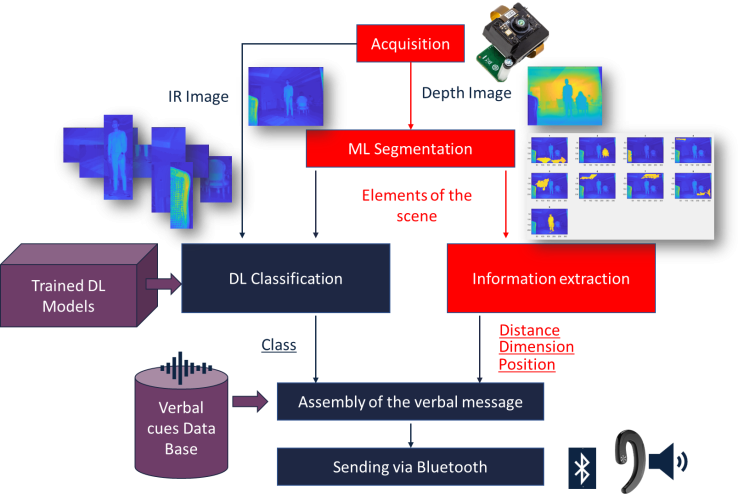

Our approach uses a Time-of-Flight (TOF) camera to capture the surroundings of VI individuals in real time. The system then applies image processing and artificial intelligence (AI) techniques to analyze and interpret the resulting visual and depth data.

The TOF camera, with a field of view of 86° × 68° and a range of 6 meters, captures the entire surrounding scene as two types of images: an infrared (IR) image and a depth image. Computer vision algorithms then segment the scene, and deep learning models identify its elements, such as obstacles, open pathways, and objects. Each image type undergoes its own processing, and the two streams are processed in parallel, as shown in Figure 3.
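To relate the stated field of view and range to the depth image, each depth pixel can be back-projected into 3D with the pinhole camera model: the focal length in pixels is $f_x = (W/2)/\tan(\mathrm{HFOV}/2)$, and a pixel $(u, v)$ with depth $Z$ maps to $X = (u - c_x)Z/f_x$, $Y = (v - c_y)Z/f_y$. The sketch below assumes that 86° × 68° means horizontal × vertical, that the sensor reports depth along the optical axis (some TOF cameras report radial distance instead, which would need an extra conversion), that the principal point is at the image centre, and that lens distortion has already been corrected. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]


def intrinsics_from_fov(width: int, height: int,
                        hfov_deg: float = 86.0, vfov_deg: float = 68.0):
    """Pinhole focal lengths (pixels) and principal point implied by the field of view."""
    fx = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    fy = (height / 2) / math.tan(math.radians(vfov_deg) / 2)
    return fx, fy, width / 2, height / 2


def back_project(depth: Sequence[Sequence[float]],
                 hfov_deg: float = 86.0, vfov_deg: float = 68.0,
                 max_range_m: float = 6.0) -> List[Point3D]:
    """Convert a depth image (metres along the optical axis) into camera-frame points.

    Pixels with no return (0) or beyond the stated 6 m range are skipped.
    """
    height, width = len(depth), len(depth[0])
    fx, fy, cx, cy = intrinsics_from_fov(width, height, hfov_deg, vfov_deg)
    points: List[Point3D] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0 or z > max_range_m:
                continue  # invalid or out-of-range measurement
            x = (u - cx) * z / fx  # positive to the right of the optical axis
            y = (v - cy) * z / fy  # positive below it (image rows grow downward)
            points.append((x, y, z))
    return points
```

The resulting point cloud is the kind of input that geometric steps such as ground-plane or stair detection operate on.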

To the best of our knowledge, ALMOURAFEK is the only tool that performs real-time, offline scene analysis while providing essential functionalities for the visually impaired, including obstacle detection and scene description. Beyond detecting obstacles, it aims to keep users safe and to act as a genuine companion that offers comprehensive assistance.

Figure 3. Flowchart of our Computer Vision Approach for Real-Time Scene Understanding and Interpretation.
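The two-branch structure of Figure 3 can be sketched as follows. This is a minimal illustration under stated assumptions: `classify_ir` stands in for whatever deep-learning recognizer runs on the IR image, `nearest_return` is a placeholder for the depth-based segmentation, and the fusion rule is deliberately naive. None of these names comes from ALMOURAFEK.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Sequence

Image = Sequence[Sequence[float]]


def nearest_return(depth: Image) -> float:
    """Placeholder depth branch: distance to the closest valid (non-zero) return."""
    return min((z for row in depth for z in row if z > 0), default=float("inf"))


def process_frame(ir: Image, depth: Image,
                  classify_ir: Callable[[Image], List[str]]) -> Dict[str, object]:
    """Run the IR (recognition) and depth (geometry) branches concurrently, then fuse."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        labels_future = pool.submit(classify_ir, ir)
        nearest_future = pool.submit(nearest_return, depth)
        labels = labels_future.result()
        nearest_m = nearest_future.result()
    # Naive fusion step: attach the geometric distance to the recognised labels.
    return {"labels": labels, "nearest_m": nearest_m}
```

In CPython, threads only give real parallelism when the branch functions release the global interpreter lock, as many native image-processing and inference libraries do; on an embedded board the two branches might instead run on separate cores or accelerators.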

Finally, the development and design of our product are grounded in a thorough study of the needs of visually impaired individuals, so that ALMOURAFEK can serve as a companion adapted to their daily lives.

4. Conclusion

Addressing the challenges of existing navigation aids for the visually impaired, this study introduced ALMOURAFEK, a wearable device that combines embedded artificial intelligence with Time-of-Flight (TOF) camera technology. Because ALMOURAFEK operates independently of internet connectivity, it offers enhanced safety and privacy while providing real-time scene analysis and multilingual assistance.

Designed through a user-centric approach, the device addresses limitations of current aids and adapts to different uses: it can be worn on a scarf or integrated into a white cane extension.

By combining scientific advances with user-oriented design, ALMOURAFEK points toward the future of assistive technologies, promising greater independence, social inclusion, and a better quality of life for visually impaired people worldwide. Its scientific contributions represent a significant step for navigation aids and for the empowerment and accessibility of millions of users.

Acknowledgments

The authors would like to express their sincere gratitude to ICUBE and Novation City for their valuable support in the early stages of the ALMOURAFEK prototype development. Special thanks also go to the Mada Innovation Program 2023, whose funding and guidance enabled the successful realization of the latest version of the prototype, making it possible to validate the proposed solution in real-world scenarios.

References

[1] Tapu, R., Mocanu, B., and Zaharia, T. (2018). Wearable assistive devices for visually impaired: A state of the art survey. Pattern Recognition Letters.

[2] Khan, S., Nazir, S., and Khan, H. (2021). Analysis of navigation assistants for blind and visually impaired people: A systematic review. IEEE Access, 9. doi:10.1109/ACCESS.2021.3052415.

[3] Cheraghi, S. A., Namboodiri, V., and Walker, L. (2017). GuideBeacon: Beacon-based indoor wayfinding for the blind, visually impaired, and disoriented. In Proc. IEEE Int. Conf. Pervasive Comput. Commun. (PerCom), Mar. 2017, pp. 121–130.

[4] Ma, J., and Zheng, J. (2017). High precision blind navigation system based on haptic and spatial cognition. In Proc. 2nd Int. Conf. Image, Vis. Comput. (ICIVC), Jun. 2017, pp. 956–959.

[5] Gomes, J. P., Sousa, J. P., Cunha, C. R., and Morais, E. P. (2018). An indoor navigation architecture using variable data sources for blind and visually impaired persons. In Proc. 13th Iberian Conf. Inf. Syst. Technol. (CISTI), Jun. 2018, pp. 1–5.

[6] Nakajima, M., and Haruyama, S. (2012). Indoor navigation system for visually impaired people using visible light communication and compensated geomagnetic sensing. In Proc. 1st IEEE Int. Conf. Commun. China (ICCC), Aug. 2012, pp. 524–529.

[7] Yang, G., and Saniie, J. (2017). Indoor navigation for visually impaired using AR markers. In Proc. IEEE Int. Conf. Electro Inf. Technol. (EIT), May 2017, pp. 1–5.

[8] Manlises, C., Yumang, A., Marcelo, M., Adriano, A., and Reyes, J. (2016). Indoor navigation system based on computer vision using CAMShift and D* algorithm for visually impaired. In Proc. 6th IEEE Int. Conf. Control Syst., Comput. Eng. (ICCSCE), 2016, pp. 481–484.

[9] van der Bie, J., Ben Allouch, S., and Jaschinski, C. (2019). Communicating multimodal wayfinding messages for visually impaired people via wearables. In Proceedings of the 21st International Conference on Human-Computer Interaction with Mobile Devices and Services. New York, NY: Association for Computing Machinery, pp. 1–7.

[10] Shahbaz, A. (2023). Wayfinding and indoor navigation for persons with visual impairments: An overview of support innovations by Mada. Nafath, Issue 22. CC BY-NC 4.0.

[11] Riehle, T. H., Anderson, S. M., Lichter, P. A., Whalen, W. E., and Giudice, N. A. (2013). Indoor inertial waypoint navigation for the blind. In Proc. 35th Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. (EMBC), Jul. 2013, pp. 5187–5190.

[12] Yayan, U., Inan, F., Guner, F., Partal, U. G., Kale, A., and Yazici, A. (2015). Indoor mobile navigation software for blind people. In Proc. 23rd Signal Process. Commun. Appl. Conf. (SIU), May 2015, pp. 666–669.

[13] Medhioub, M., Khanfir Kallel, I., Ammar Bouhamed, S., Derbel, N., Solaiman, B., and Kanoun, O. (2021). Electronic embedded system for stair recognition based on possibilistic modeling of ultrasonic signal. IEEE Sensors Journal, 21(5), pp. 5787–5797, Mar. 2021.

[14] Charfi, A., Ammar Bouhamed, S., Bossé, E., Khanfir Kallel, I., Bouchaala, W., Solaiman, B., and Derbel, N. (2020). Possibilistic similarity measures for data science and machine learning applications. IEEE Access, 8, pp. 49198–49211.

[15] Ammar Bouhamed, S., Khanfir Kallel, I., Yager, R. R., Bossé, É., and Solaiman, B. (2020). An intelligent quality-based approach to fusing multi-source possibilistic information. Information Fusion, 55, pp. 68–90.

[16] Khanfir Kallel, I., Almouahed, S., Alsahwa, B., and Solaiman, B. (2019). The use of contextual spatial knowledge for low-quality image segmentation. Multimedia Tools and Applications, 78, p. 9645.

[17] Frikha Elleuch, J., Sellami, D., and Khanfir Kallel, I. (2016). Indoor/outdoor navigation system based on possibilistic traversable area segmentation for visually impaired people. Electronic Letters on Computer Vision and Image Analysis, 15(1), pp. 60–76.

[18] Frikha Eleuch, J., Khanfir Kallel, I., and Sellami Masmoudi, D. (2013). New ground plane segmentation method for electronic cane. Journal of Image and Graphics (JIG), 1(2), pp. 72–75.

[19] Ammar Bouhamed, S., Khanfir Kallel, I., and Sellami Masmoudi, D. (2013). New electronic white cane for stair case detection and recognition using ultrasonic sensor. International Journal of Advanced Computer Science and Applications (IJACSA), 4(6), pp. 243–256.